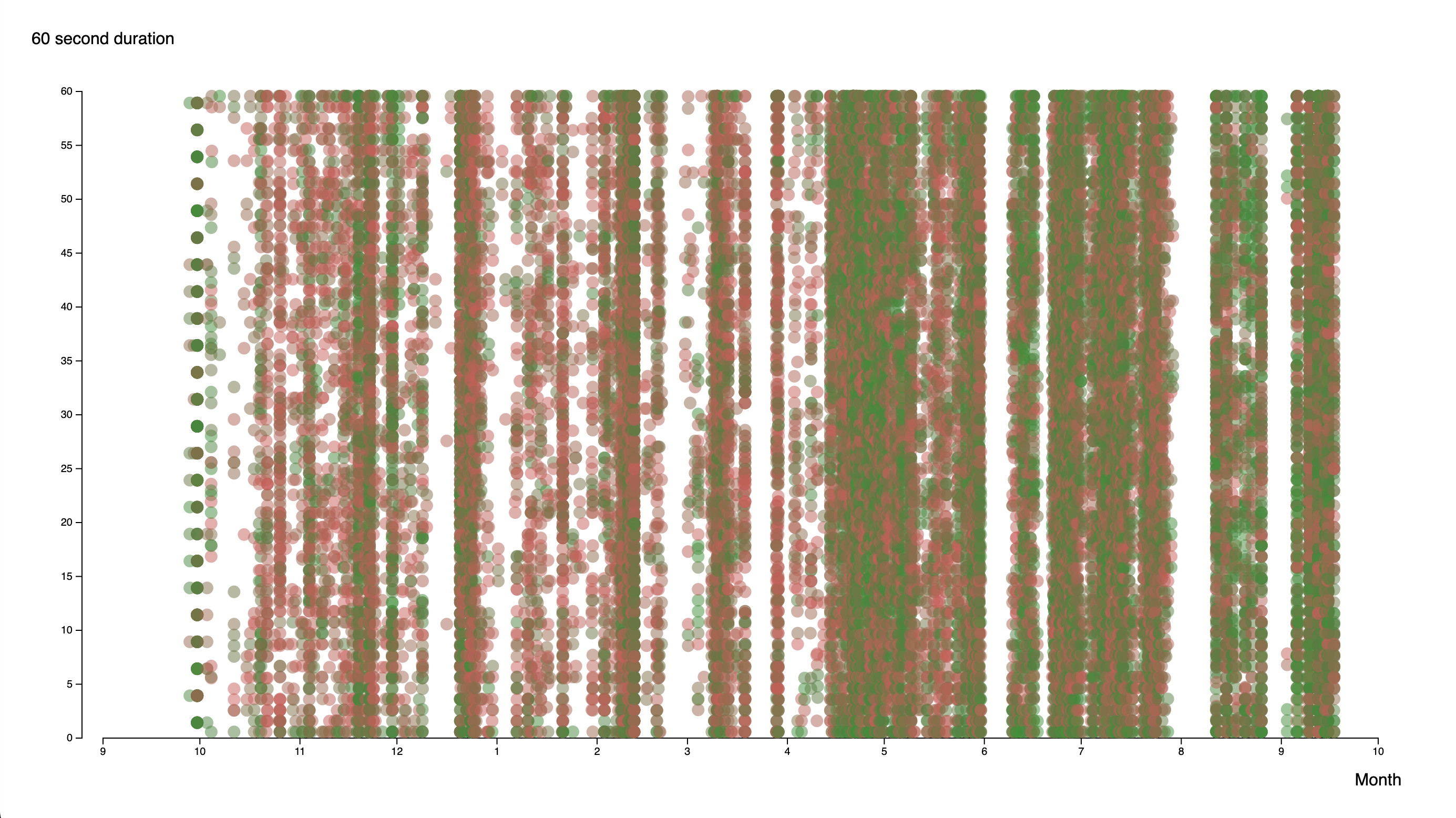

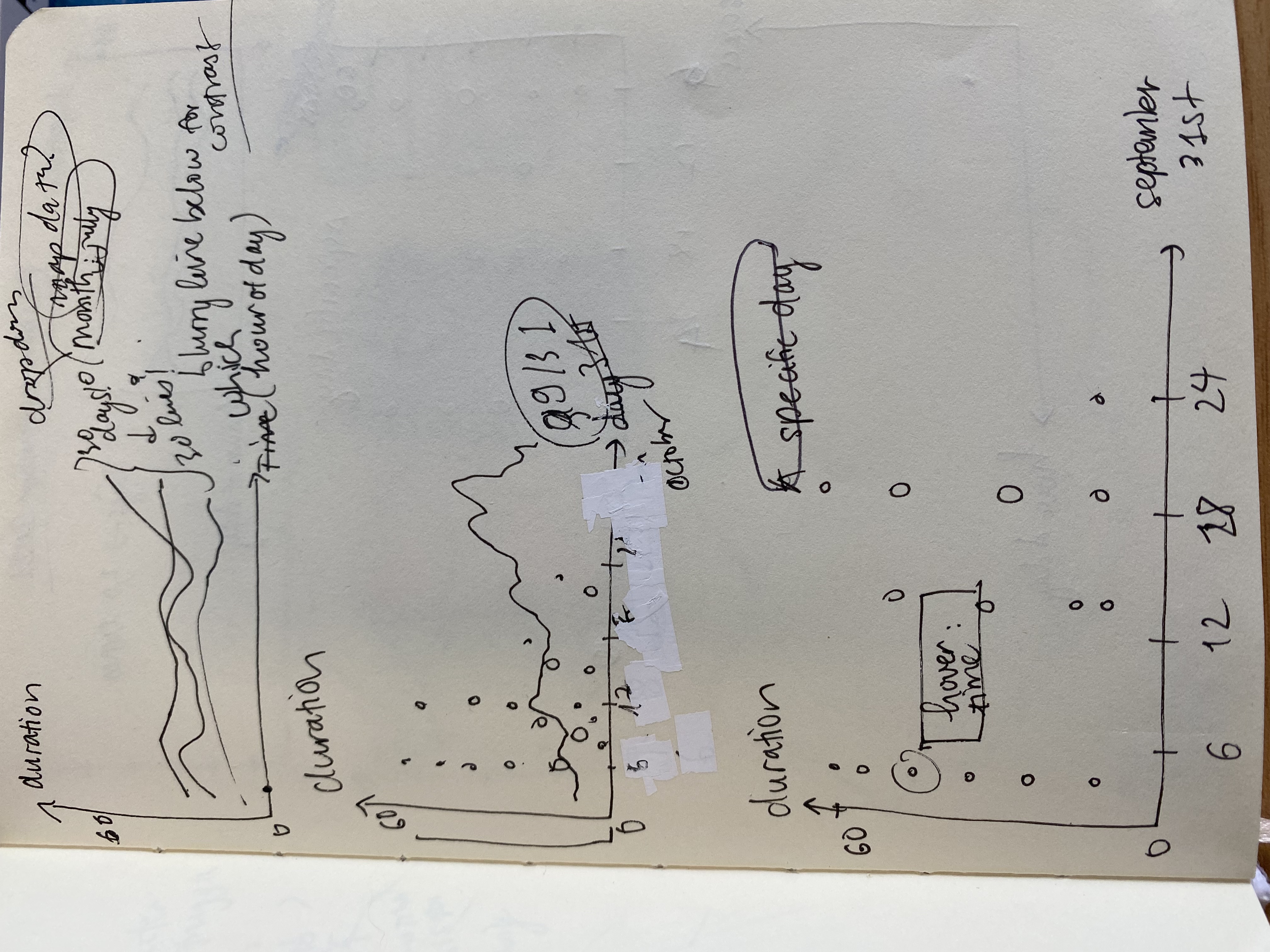

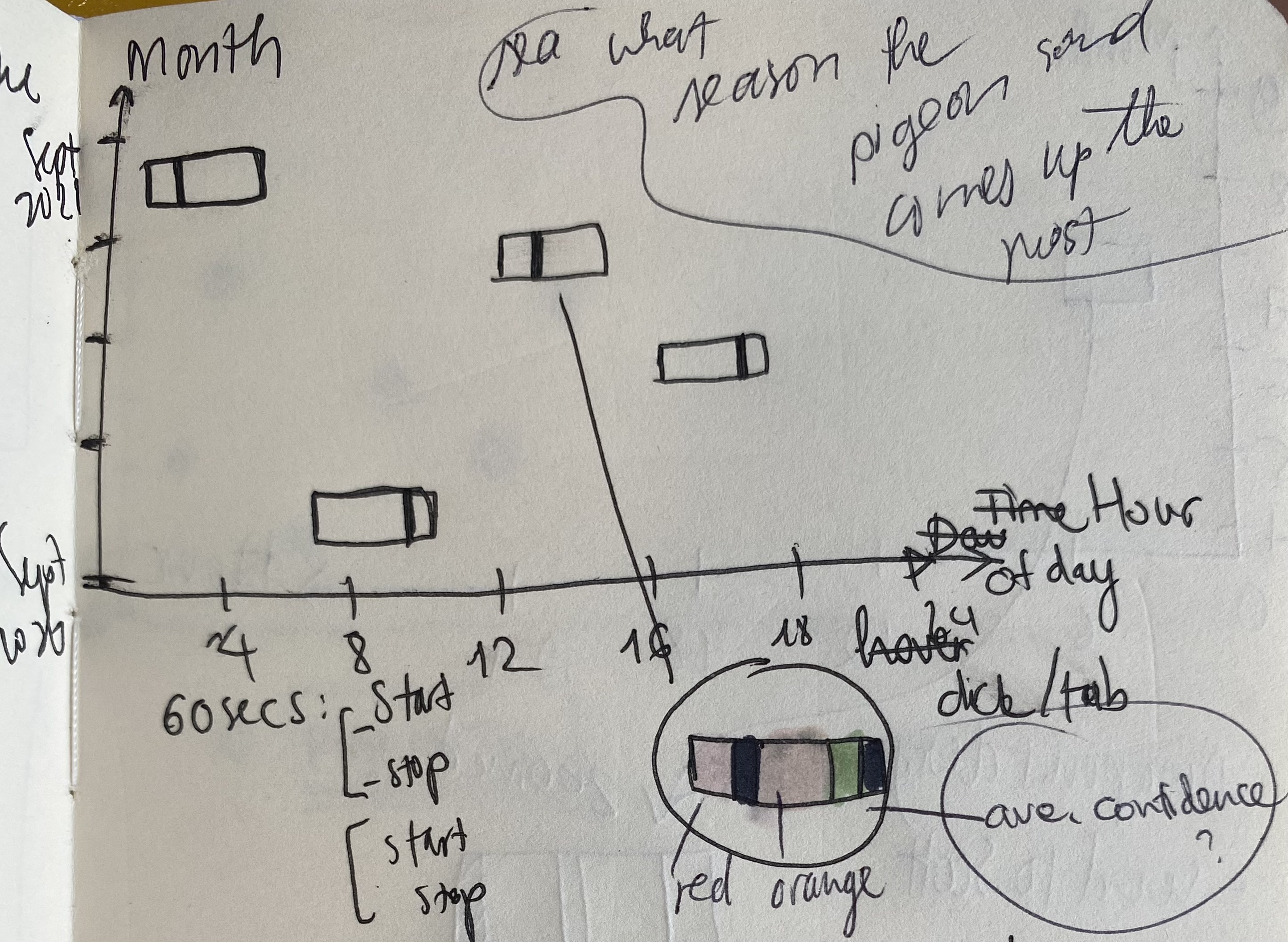

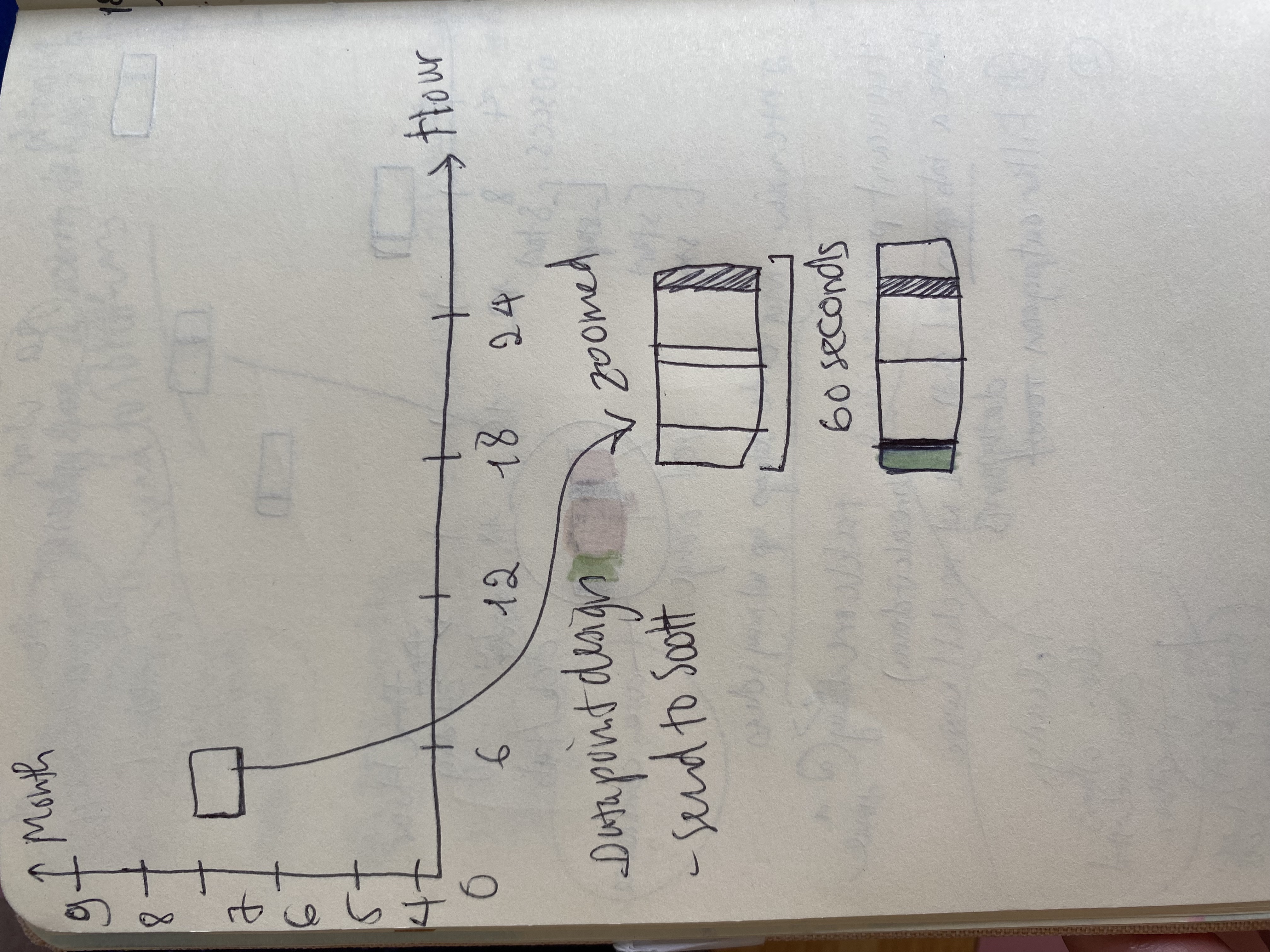

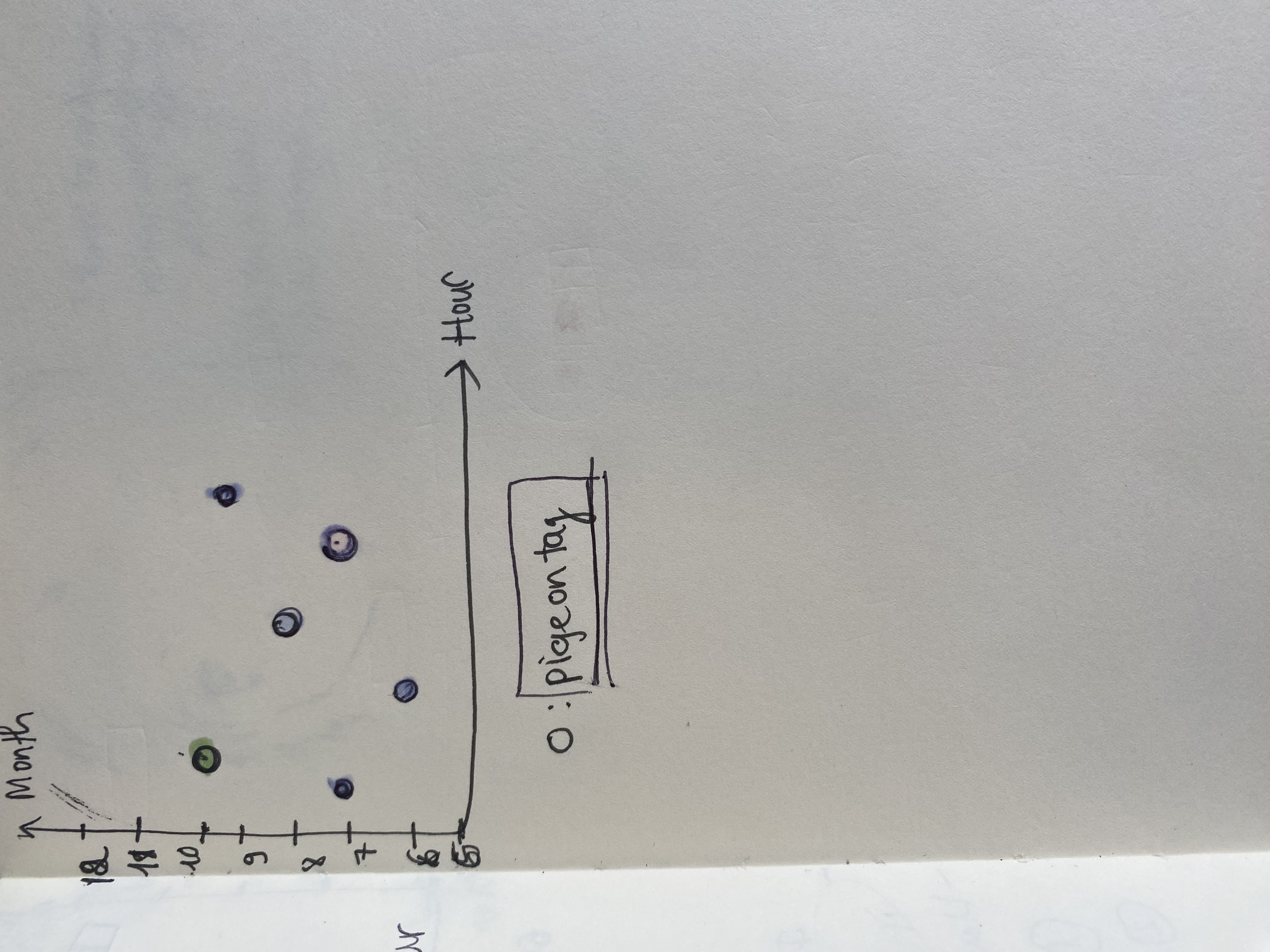

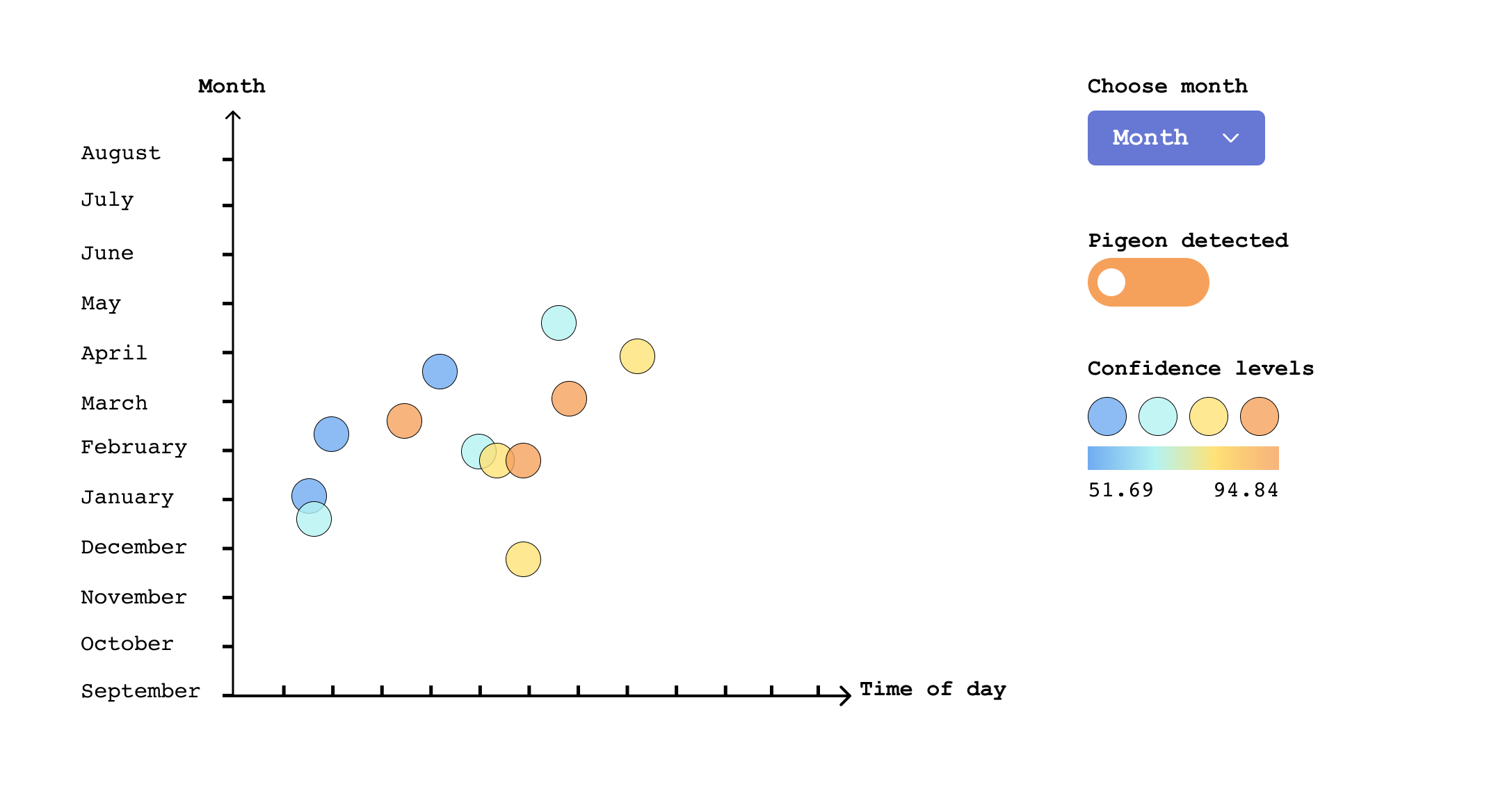

The challenge of this dataset is visualizing short observations across a long period of time. The data consists of over 3,000 observations, each describing a 60-second audio clip recorded at a given timestamp. The dataset spans September 2020 to September 2021.
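To make the scale problem concrete, the rough arithmetic below compares one 60-second clip with the full timeline. The exact first and last days are not given, so September 1, 2020 and September 1, 2021 are assumed, and the 1000-pixel axis width is a hypothetical value chosen only for illustration.

```python
from datetime import datetime, timezone

# Assumed span: only the months are known, so the 1st of each month is used.
start = datetime(2020, 9, 1, tzinfo=timezone.utc)
end = datetime(2021, 9, 1, tzinfo=timezone.utc)

timeline_seconds = (end - start).total_seconds()  # 365 days
clip_seconds = 60

# Fraction of the whole timeline covered by a single 60-second clip.
fraction = clip_seconds / timeline_seconds

# Hypothetical chart width in pixels, for illustration only.
axis_width_px = 1000
clip_width_px = fraction * axis_width_px

print(f"timeline: {timeline_seconds:.0f} s")
print(f"one clip covers {fraction:.2e} of the timeline")
print(f"on a {axis_width_px}px axis, one clip is {clip_width_px:.4f}px wide")
```

Under these assumptions a single clip is about 0.002 pixels wide, so individual clips cannot be drawn to scale on a year-long axis.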

Each observation consists of a timestamp, a location (all observations come from the same hydrophone area), and the detections found within its 60-second clip, stored in the `predictions` property.

An example data point:

```json
[{
  "id": "464e0e81-ba53-4894-bf44-fa65b338d826",
  "modelId": "FastAI",
  "audioUri": "https://livemlaudiospecstorage.blob.core.windows.net/audiowavs/rpi_bush_point_2021_04_16_07_19_14_PDT.wav",
  "imageUri": "https://livemlaudiospecstorage.blob.core.windows.net/spectrogramspng/rpi_bush_point_2021_04_16_07_19_14_PDT.png",
  "reviewed": true,
  "timestamp": "2021-04-16T14:19:14.629478Z",
  "whaleFoundConfidence": 64.46377411484718,
  "location": {
    "id": "rpi_bush_point",
    "name": "Bush Point",
    "longitude": -122.6039,
    "latitude": 48.03371
  },
  "source_guid": "rpi_bush_point",
  "predictions": [
    {
      "id": 0,
      "startTime": 44.7457627118644,
      "duration": 1.0169491525423728,
      "confidence": 0.5313302651047707
    },
    {
      "id": 1,
      "startTime": 53.89830508474576,
      "duration": 1.0169491525423728,
      "confidence": 0.6244806498289108
    },
    {
      "id": 2,
      "startTime": 55.932203389830505,
      "duration": 1.0169491525423728,
      "confidence": 0.552818275988102
    },
    {
      "id": 3,
      "startTime": 56.94915254237288,
      "duration": 1.0169491525423728,
      "confidence": 0.8699217736721039
    }
  ],
  "_rid": "cp0tAOsNIHcsBgAAAAAAAA==",
  "_self": "dbs/cp0tAA==/colls/cp0tAOsNIHc=/docs/cp0tAOsNIHcsBgAAAAAAAA==/",
  "_etag": "\"0400fec6-0000-0800-0000-60799ddd0000\"",
  "_attachments": "attachments/",
  "SRKWFound": "no",
  "comments": "sea bird",
  "dateModerated": "2021-04-16T14:23:25Z",
  "moderator": "live.com#dbaing17@gmail.com",
  "tags": "",
  "_ts": 1618583005
}]
```
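Every `startTime` in the sample falls between 0 and 60, so it appears to be an offset in seconds within the 60-second clip. Under that assumption, one way to place detections on the overall timeline is to add each offset to the observation's `timestamp`. The sketch below also assumes `timestamp` marks the start of the clip (the `audioUri` file name encodes 07:19:14 PDT, which matches 14:19:14 UTC, but the data point does not state this explicitly). `parse_timestamp` and `detection_intervals` are hypothetical helper names.

```python
import json
from datetime import datetime, timedelta

# The sample observation above, reduced to the fields used here.
raw = """
[{
  "timestamp": "2021-04-16T14:19:14.629478Z",
  "predictions": [
    {"id": 0, "startTime": 44.7457627118644, "duration": 1.0169491525423728, "confidence": 0.5313302651047707},
    {"id": 1, "startTime": 53.89830508474576, "duration": 1.0169491525423728, "confidence": 0.6244806498289108},
    {"id": 2, "startTime": 55.932203389830505, "duration": 1.0169491525423728, "confidence": 0.552818275988102},
    {"id": 3, "startTime": 56.94915254237288, "duration": 1.0169491525423728, "confidence": 0.8699217736721039}
  ]
}]
"""

def parse_timestamp(value: str) -> datetime:
    # fromisoformat() only accepts a trailing "Z" from Python 3.11 on,
    # so replace it with an explicit UTC offset first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def detection_intervals(observation: dict) -> list[tuple[datetime, datetime, float]]:
    """Convert each prediction into an absolute (start, end, confidence) tuple.

    Assumption: startTime and duration are seconds measured from the
    observation's timestamp, taken to be the start of the 60-second clip.
    """
    clip_start = parse_timestamp(observation["timestamp"])
    intervals = []
    for p in observation["predictions"]:
        start = clip_start + timedelta(seconds=p["startTime"])
        end = start + timedelta(seconds=p["duration"])
        intervals.append((start, end, p["confidence"]))
    return intervals

observations = json.loads(raw)
intervals = detection_intervals(observations[0])
for start, end, conf in intervals:
    print(start.isoformat(), end.isoformat(), f"{conf:.2f}")
```

With absolute start and end times, each detection becomes an interval that can be drawn or aggregated on the same axis as every other observation.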

Because the observations span September 2020 to September 2021, each 60-second clip is tiny relative to the full timeline; even within a single day, data points may already overlap. My goal is therefore to make the visualization as clear as possible while still conveying enough information about the detections.
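One common way to reduce overplotting is to aggregate before drawing, for example one mark per day showing how many observations occurred and their highest `whaleFoundConfidence`. The sketch below illustrates that idea only; it is not necessarily the approach used for the figures here. `daily_summary` is a hypothetical helper, and days are bucketed in UTC for simplicity, although the hydrophone's local time (Pacific time, as the `PDT` suffix in `audioUri` suggests) may be a more natural day boundary.

```python
from collections import defaultdict
from datetime import datetime

def daily_summary(records):
    """Group (timestamp, confidence) records by UTC calendar day.

    Returns {day: (count, max_confidence)} ordered by day, so each day
    can be drawn as a single mark instead of many overlapping ones.
    """
    buckets = defaultdict(list)
    for ts, conf in records:
        # Normalize the trailing "Z" so fromisoformat() works before Python 3.11.
        day = datetime.fromisoformat(ts.replace("Z", "+00:00")).date()
        buckets[day].append(conf)
    return {day: (len(confs), max(confs)) for day, confs in sorted(buckets.items())}

# Made-up records for illustration; only the first pair comes from the
# sample observation above.
records = [
    ("2021-04-16T14:19:14.629478Z", 64.46377411484718),
    ("2021-04-16T14:25:00.000000Z", 71.0),
    ("2021-04-16T21:03:00.000000Z", 55.0),
    ("2021-04-17T03:12:00.000000Z", 80.0),
]

summary = daily_summary(records)
for day, (count, peak) in summary.items():
    print(day, count, round(peak, 2))
```

The same grouping works at other granularities (hour, week) by changing the bucketing key, which trades temporal detail for less visual clutter.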