Winged - Leap Motion drawing app

Main roles

Tools used

Figma, Miro and paper prototyping

Problem statement

Disabilities have long had a negative emotional and physical impact on adults.

Many disabled artists want to be able to draw. However, many of them have to use other parts of their bodies (such as their mouths or legs), which can cause considerable pain and discomfort.

In art therapy, art is a means for people to express themselves freely and create something new. It is especially helpful when they are bound by illness and feel less in control of their health.

“Art provides unlimited possibilities for personal, academic, and professional success.” - Christopher & Dana Reeve Foundation. Winged promotes inclusivity in the arts and helps artists achieve their goals, whether for leisure or a serious career.

Audience

Our audience is people with arm or hand disabilities, of any age or gender.

Our testing participants had no arm or hand disabilities, or only minor injuries, because consent and safety during Covid-19 were a concern.

Challenges

Dr. Chris Creed at the University of Birmingham has researched software that uses the Leap Motion controller to let users draw with hand gestures in midair, but the app's interface was too complicated for users to navigate.

Winged's main challenge is to create an interface that is easy to navigate for users with different physiological needs.

How Might We (HMWs)

- HMW help the users navigate the interface with minimal discomfort and muscle pain?

- HMW make the user interface (UI) as minimalistic as possible?

- HMW make the options and the icons easier to click?

- HMW move the canvas to adjust to the users’ hand placement?

- HMW create independence for the users when they use our app?

- HMW help users boost their creativity, and more importantly, their self-esteem?

In this case study, I propose solutions for HMWs 1 to 4 and leave 5 and 6 for further research and future iterations.

As in Dr. Creed's research, we use the Leap Motion controller as the tool users draw with.

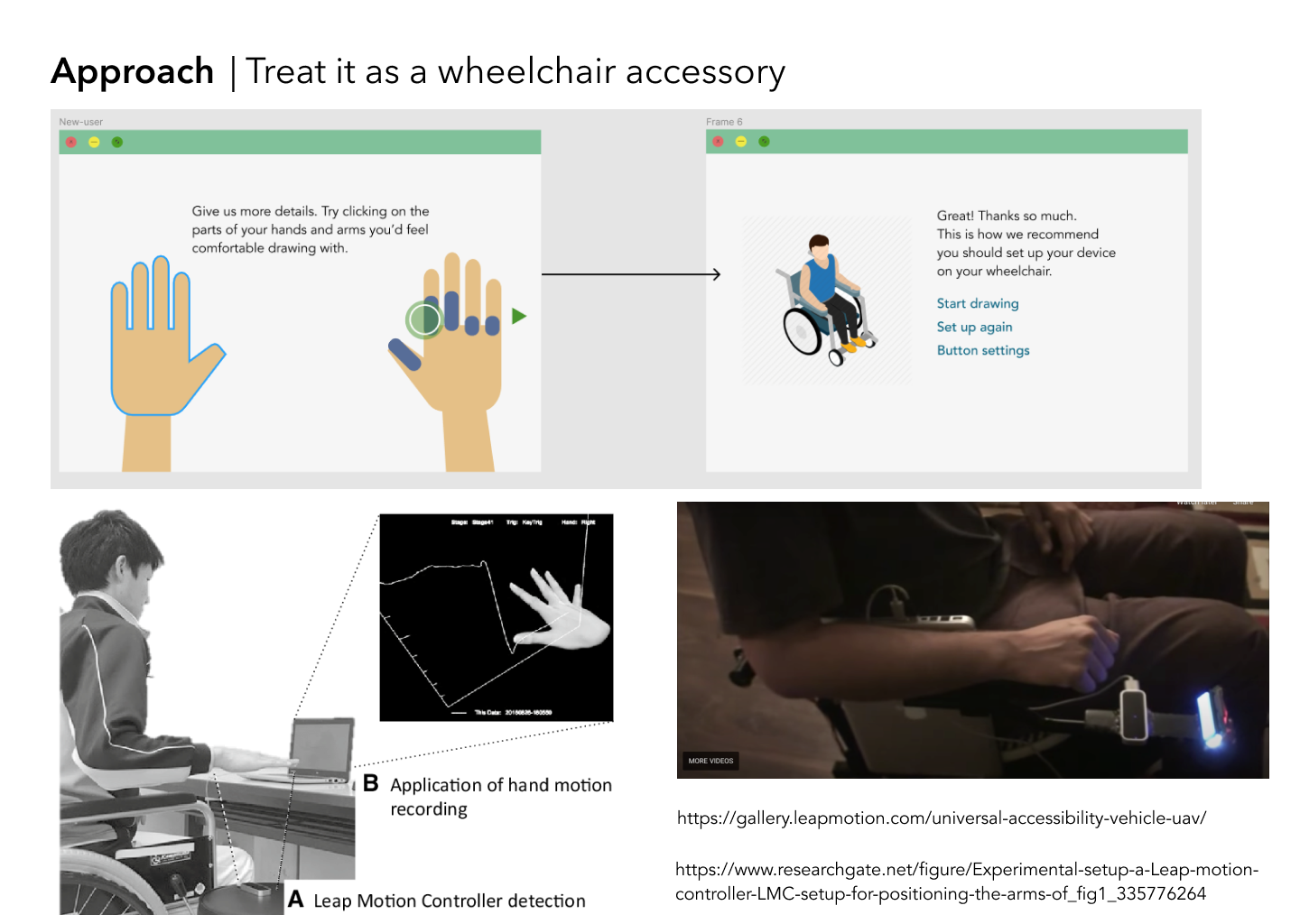

Approach

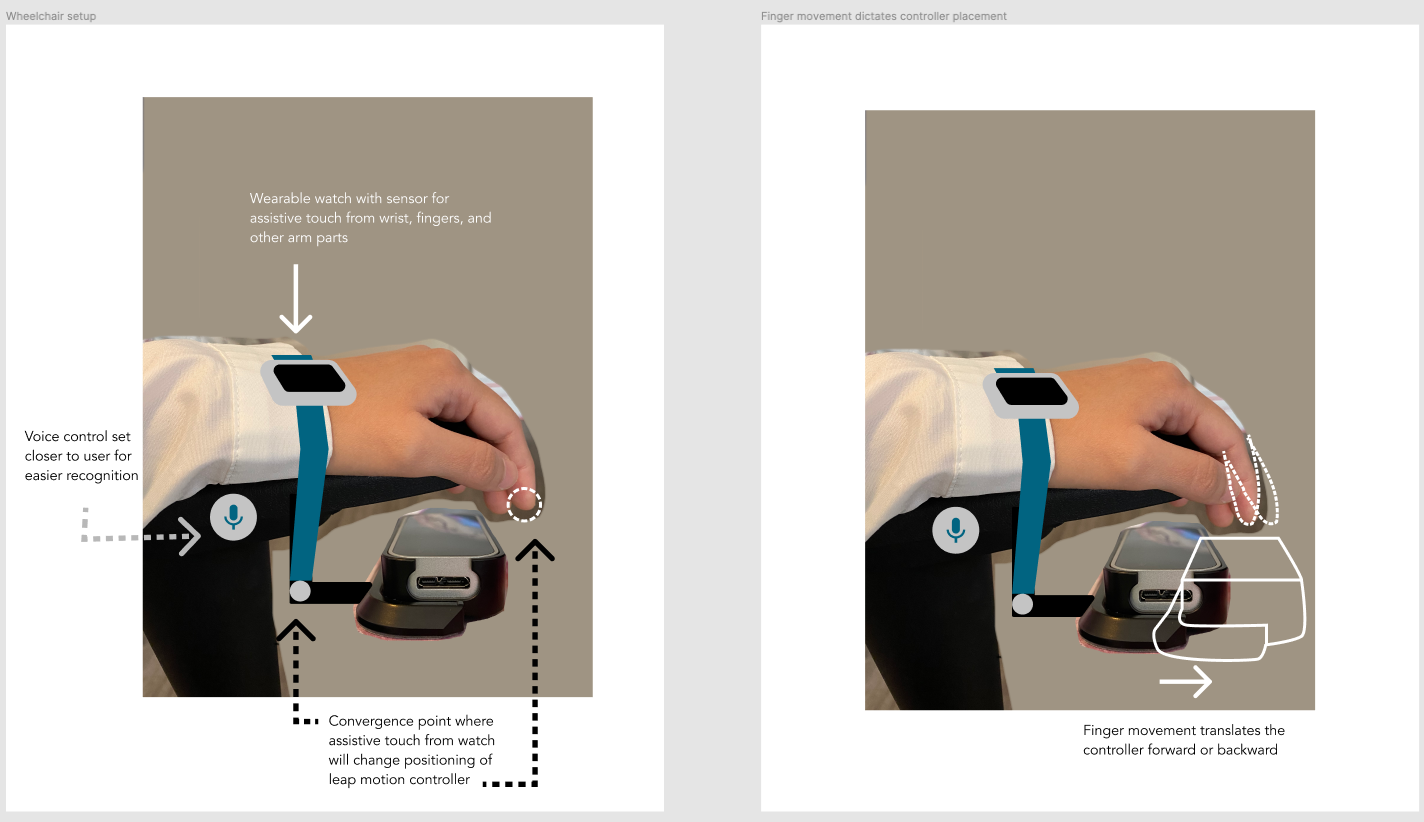

Our approach to the HMWs combines hand detection, a recommended wheelchair setup, voice commands, and a minimalistic design:

- Minimalist interface

- Hand gestures detected by the Leap Motion controller

- An optimized wheelchair mounting position for the Leap Motion controller, tailored to each user

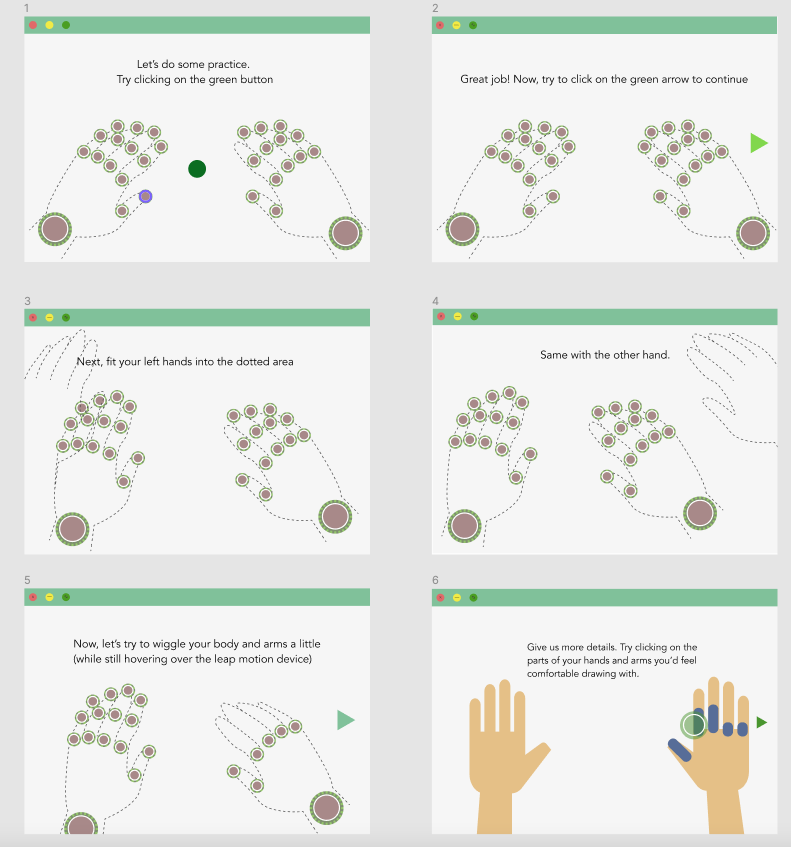

- Detection of users’ hand parts to adapt to their physiological structures

- Voice commands to minimize hand gestures and thus pain

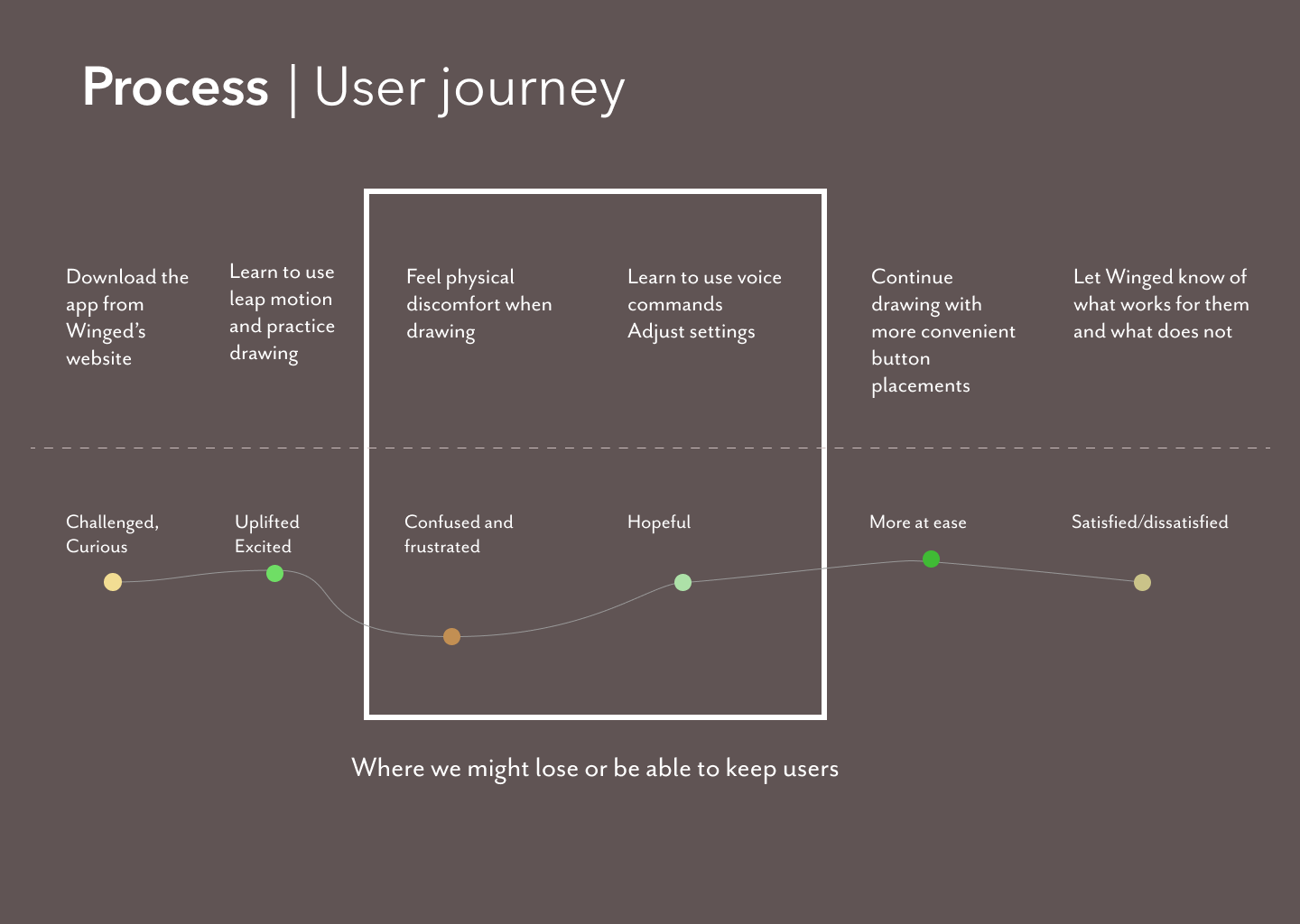

User Journey

The main pain point we identified in the user journey is the period when users are getting used to the app. Too much physical discomfort at the beginning would turn users away, so we want the onboarding phase to be as smooth and accommodating as possible.

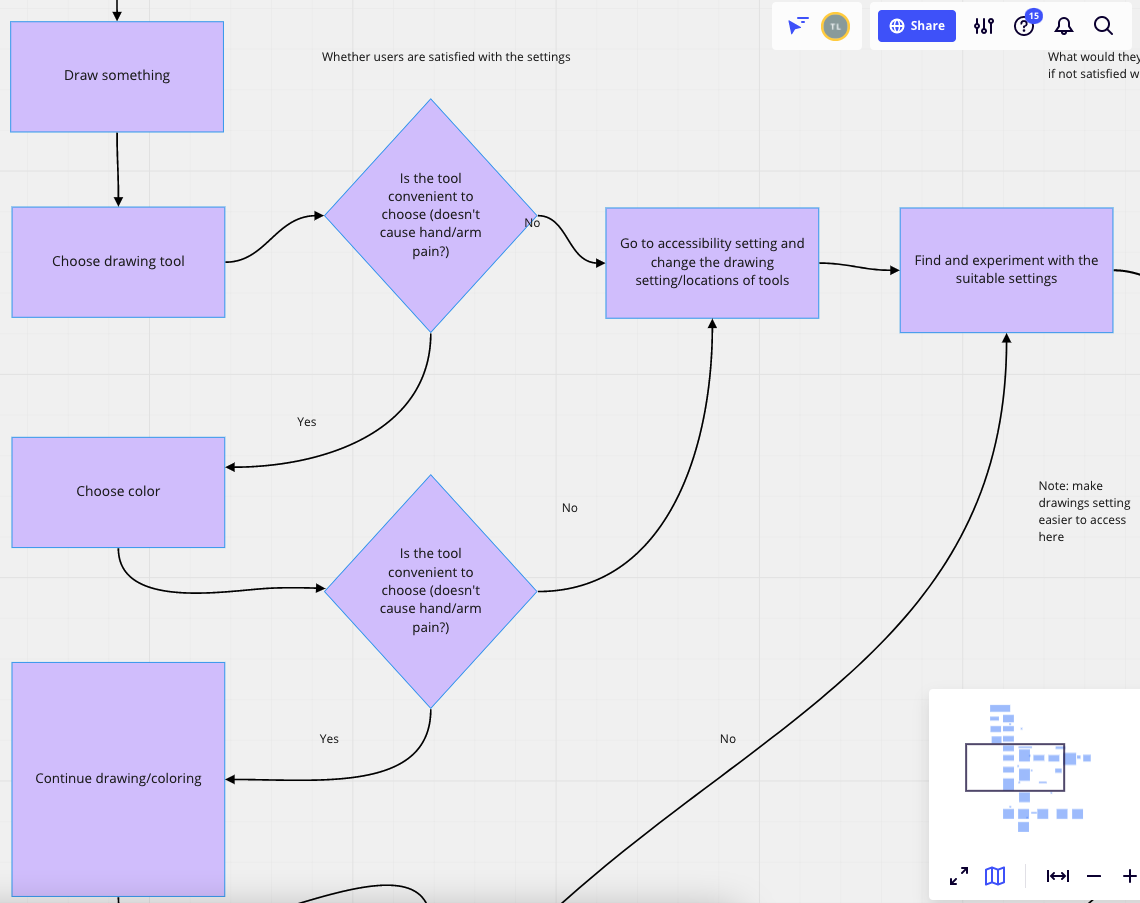

User flow

I created a user flow to identify where we can solve the pain point for users. The highlight of the user flow is a constant feedback loop in which users can go back and forth and adjust the settings to suit their needs.

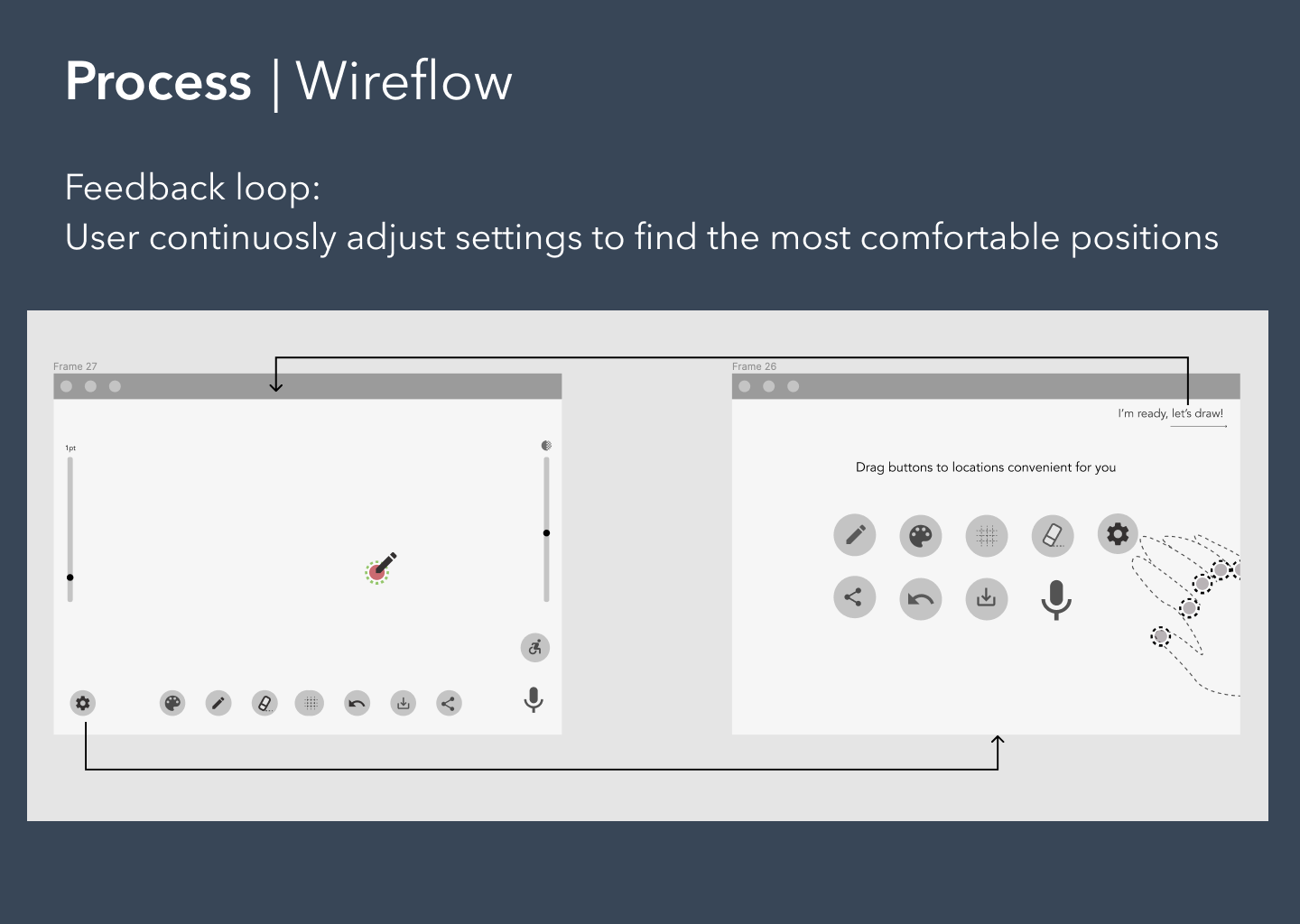

Wireflow

To visualize the interface I wanted to build, I first created a wireflow showing how users would adjust settings. This raised two questions: 1) Can we recommend a setting for users during onboarding? 2) How do we do so for users with various hand disabilities?

Arising issues

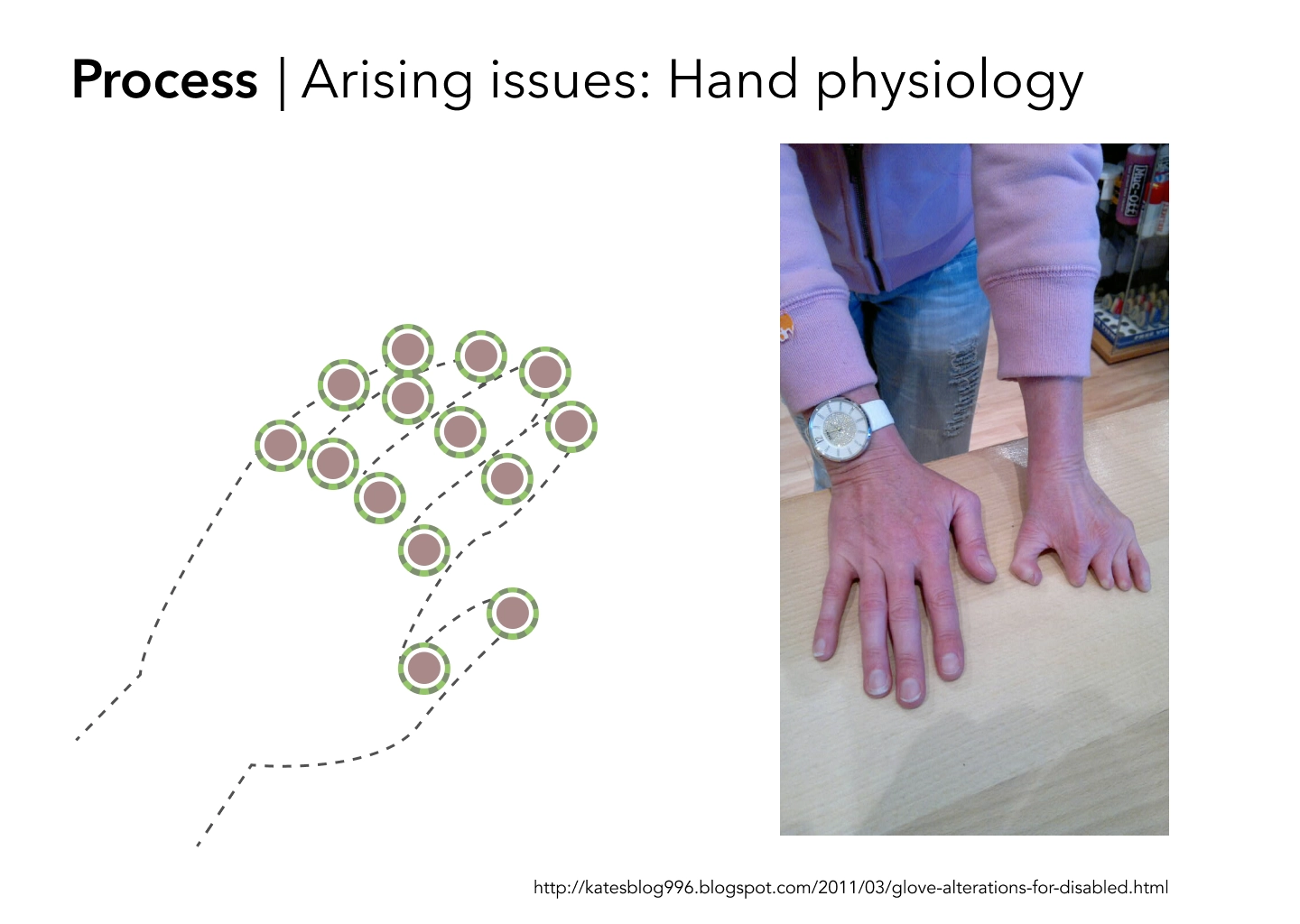

One issue I encountered while designing the interface is that different hand physiologies need different accommodations and setups. When users' hands or arms do not have the full number of joints and parts, we have to find ways to include them in our app as much as possible.

One example of a user's hand physiology compared with a hand with all its joints

Another issue is how to perform user testing and design iteration. Because our app is interactive in 3D space, we cannot simply test it with a clickable prototype. It is hard for users to imagine how they would interact with the app from a 2D mockup, while coding an overly elaborate interface that is subject to drastic changes would waste a lot of time.

Instead of going from wireframes to digital prototypes and then to code, this app requires putting each fidelity level into code to provide the most genuine user testing experience.

Solutions

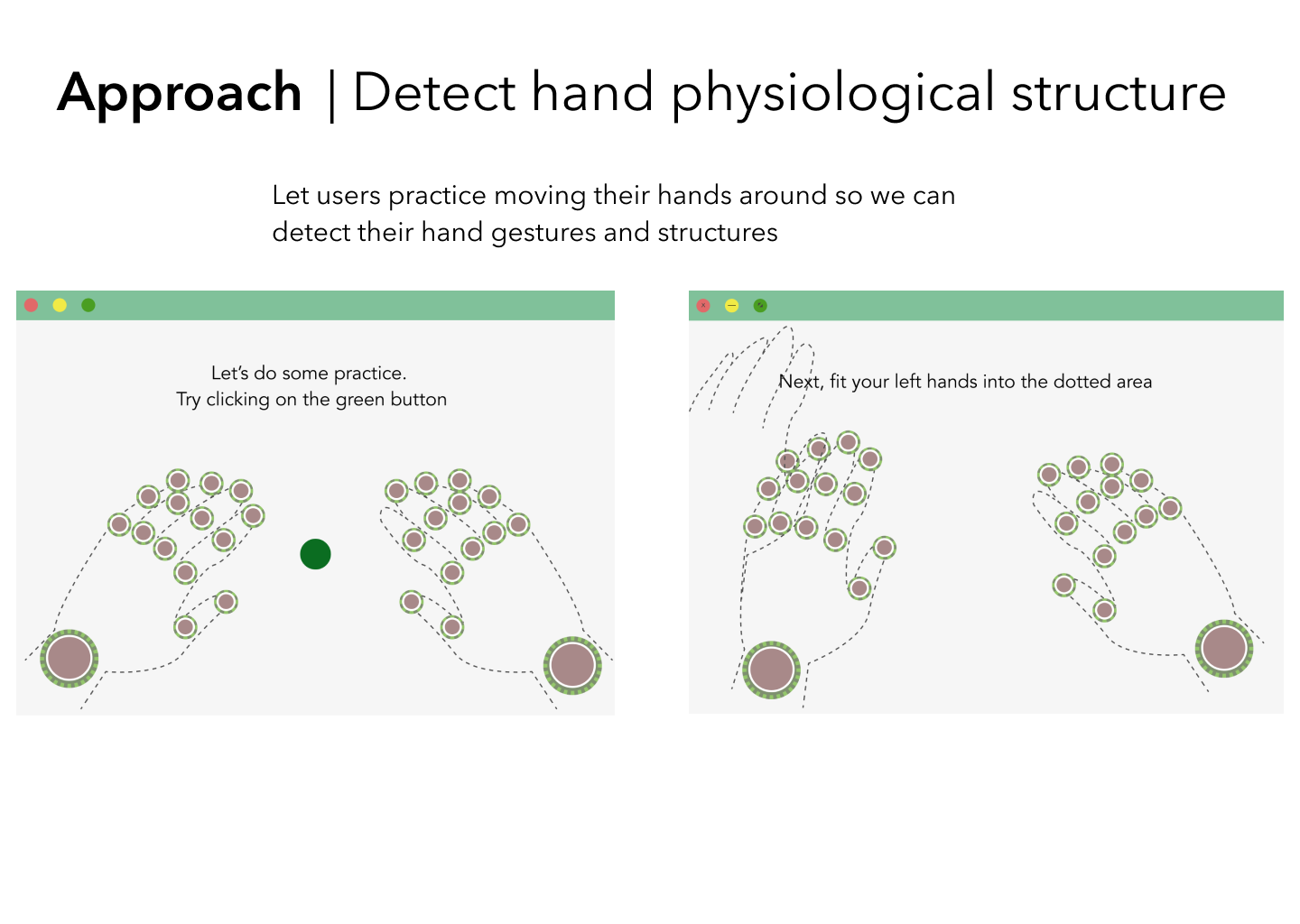

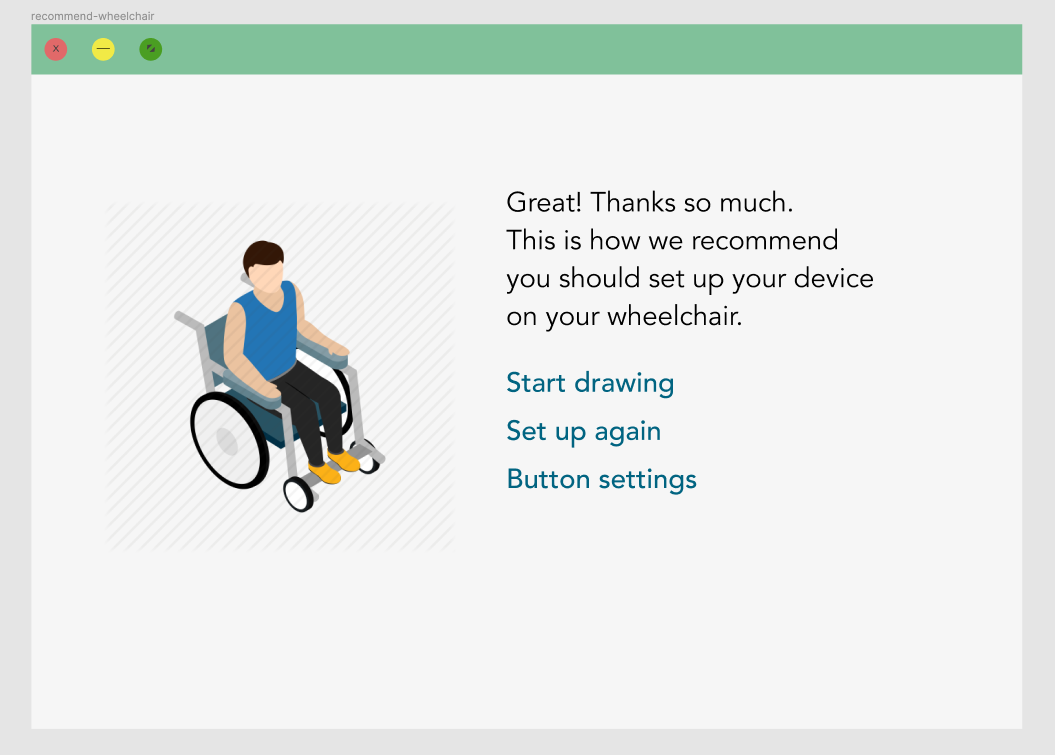

1. Recommend the best device setup for users based on their hand structures

My proposed solution is to have the onboarding screens detect which of the users' hand joints and parts are ready for drawing.

By having users complete some simple exercises (clicking a button, moving their hands to a designated location), we might be able to detect which parts of their hands they are comfortable using and moving.
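As a rough illustration of how such an exercise could feed a recommendation, here is a minimal Python sketch. It assumes fingertip positions (in millimetres, relative to the controller) have already been sampled during an exercise; it does not call the Leap Motion SDK. The finger names, the data layout, and the 20 mm threshold are hypothetical choices for illustration, not values used by Winged. Fingers that are never tracked (for example, missing digits) simply do not appear in the input.

```python
from __future__ import annotations

import math

# Hypothetical threshold (mm): how far a finger must travel during an
# onboarding exercise to count as comfortably movable.
MOVEMENT_THRESHOLD_MM = 20.0

Point = tuple[float, float, float]


def path_extent(points: list[Point]) -> float:
    """Largest straight-line distance between any two sampled positions (mm)."""
    best = 0.0
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            best = max(best, math.dist(points[i], points[j]))
    return best


def assess_fingers(samples: dict[str, list[Point]]) -> dict[str, float]:
    """Range of motion per tracked finger, in mm."""
    return {finger: path_extent(points) for finger, points in samples.items()}


def recommend_pointer(samples: dict[str, list[Point]]) -> str | None:
    """Suggest the finger with the largest range of motion above the threshold.

    Returns None if no finger moved enough, so the app could fall back to
    another input method (for example, voice commands).
    """
    ranges = assess_fingers(samples)
    movable = {f: r for f, r in ranges.items() if r >= MOVEMENT_THRESHOLD_MM}
    if not movable:
        return None
    return max(movable, key=movable.get)


if __name__ == "__main__":
    # Made-up samples from a "move your hand to the target" exercise.
    samples = {
        "index": [(0.0, 200.0, 0.0), (0.0, 200.0, 35.0), (5.0, 210.0, 40.0)],
        "thumb": [(-30.0, 190.0, 0.0), (-31.0, 190.0, 2.0)],
    }
    print(recommend_pointer(samples))  # index
```

Range of motion is only one possible signal; a real onboarding flow would likely also ask users how comfortable each movement felt.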

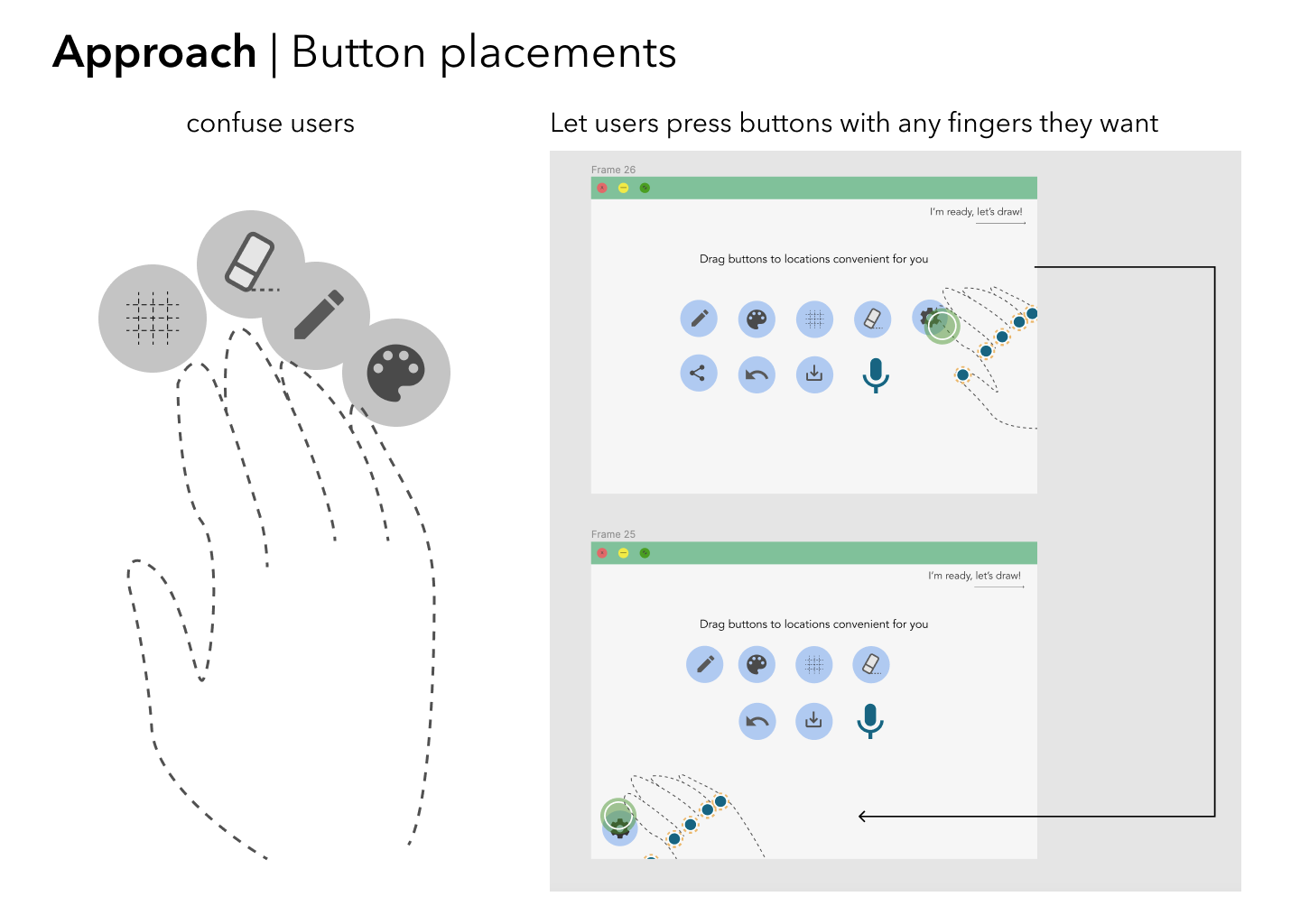

2. Give users freedom to set up the interface at their convenience

While doing user testing, I realized that placing the buttons on users' fingertips is not at all optimal. At first, I only took into consideration users who have paralyzed arms but can still move their fingers. However, even for this small subset of users, the approach was confusing: it was not clear to the participants I tested with that the buttons were on their fingertips. Moreover, the design had a major flaw: it did not accommodate other disabilities.

Many participants commented that it would be easier for them to use whichever hand or finger they wanted to click buttons and navigate the interface. We therefore give users the freedom to customize the interface and interact with the app at their convenience, while still offering our recommendation to support them.

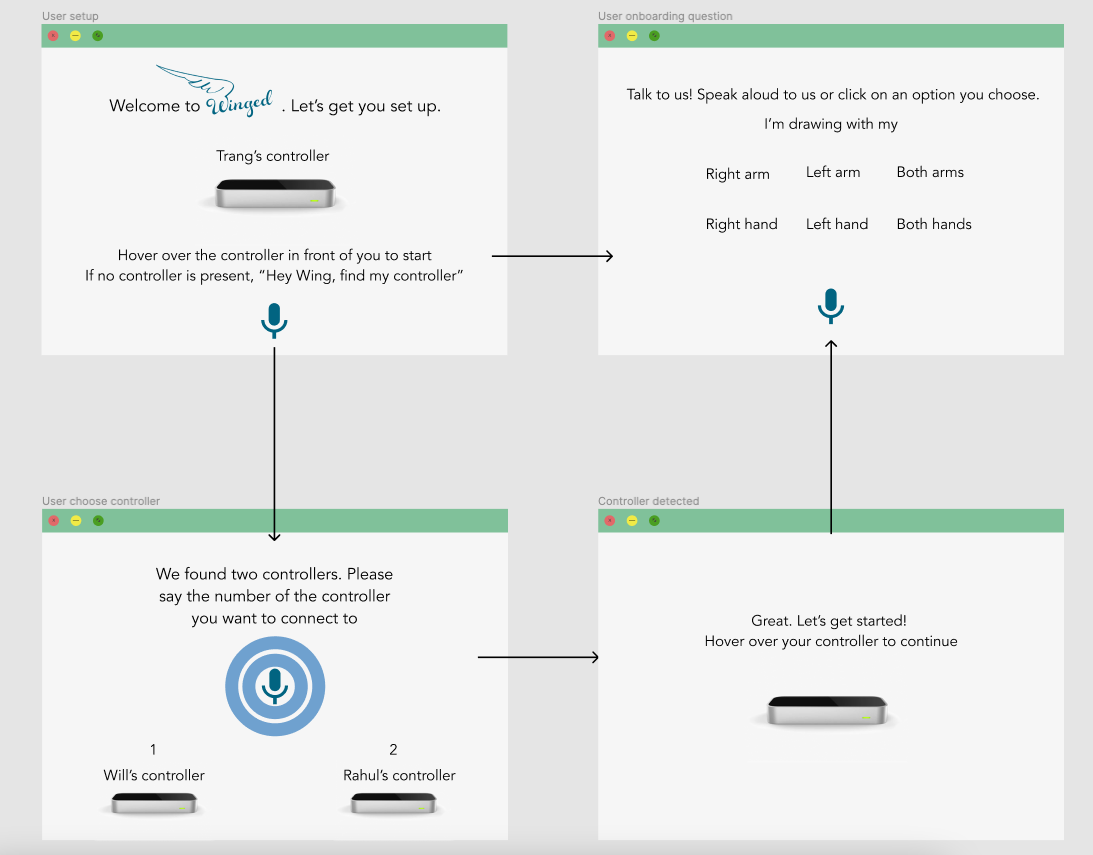

3. Voice commands for users to set up devices and choose drawing options

When users start using the app, we do not know about their disabilities or how their hands and arms are structured. To reduce this initial friction, we could guide them through setup with voice commands, letting them tell us about their disabilities and choose a suitable device.

Voice commands also let users choose drawing options without physically clicking buttons. They can tell us which color they want or which brush they would like to use, much like talking to Siri.
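To show how a spoken request could map onto a drawing option, here is a minimal Python sketch. It assumes a separate speech-to-text step has already produced a transcript; the color and brush vocabularies and the `DrawingCommand` type are hypothetical, and simple keyword matching stands in for whatever language understanding a production app would use.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical vocabularies; a real app would load these from its own
# palette and brush library.
COLORS = {"red", "blue", "green", "yellow", "black", "white"}
BRUSHES = {"pencil", "marker", "watercolor", "eraser"}


@dataclass
class DrawingCommand:
    kind: str   # "color" or "brush"
    value: str


def parse_command(transcript: str) -> DrawingCommand | None:
    """Map a transcribed utterance such as "pick red" or "use the marker"
    to a drawing option using keyword matching.

    The first recognized keyword wins; None means nothing was recognized,
    so the app could ask the user to repeat the request.
    """
    words = re.findall(r"[a-z]+", transcript.lower())
    for word in words:
        if word in COLORS:
            return DrawingCommand("color", word)
        if word in BRUSHES:
            return DrawingCommand("brush", word)
    return None


if __name__ == "__main__":
    for phrase in ["Pick the RED color, please", "use the marker", "undo that"]:
        print(phrase, "->", parse_command(phrase))
```

Keyword matching keeps the sketch small and predictable; it would not handle phrases like "a bit darker", which would need a richer grammar.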

4. Suitable wheelchair setup to minimize users' movements

To adapt to different users' ergonomic conditions, we need to think about a setup that is adjustable for different disabilities.

I asked one user (who has no disabilities), "How would you go about using a drawing app if your right arm were injured from rock climbing?" She loves rock climbing and is right-handed. "I would use my left arm to move the paper around and my right arm to draw, so my injured arm does not have to move around as much," she answered.

This answer inspired the fourth solution: mounting the Leap Motion controller on a wheelchair at a suitable location.

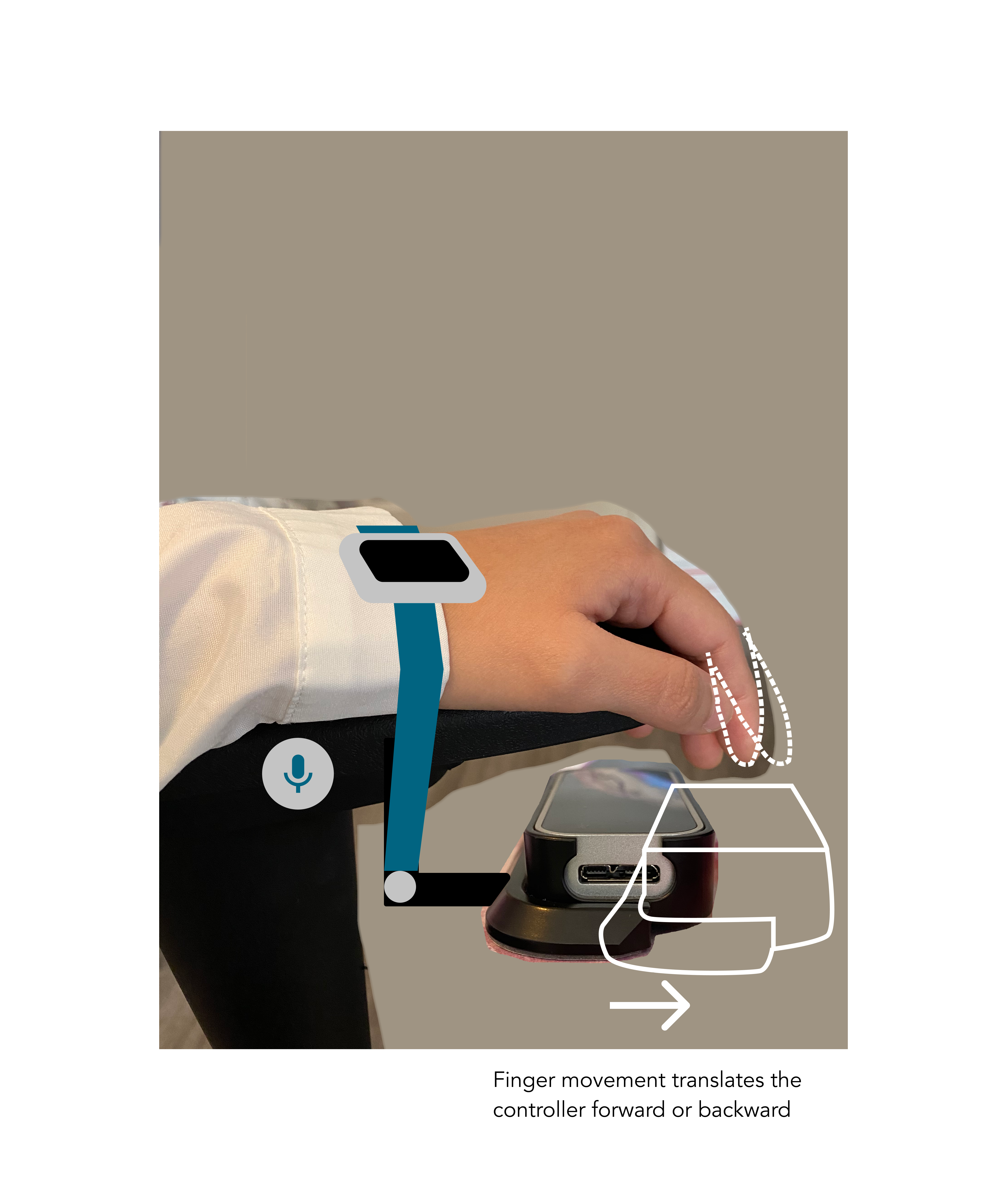

While experimenting with the Leap Motion controller, I noticed that when I moved the controller with one hand while keeping the detected hand still, the detected hand moved around on the screen. This mirrors the "moving the paper around" idea our participant described.

Thus, a wheelchair with the controller attached and movable at the click of a button would give users much more freedom to use the whole canvas despite a limited arm range.

Users with arthrogryposis, for example, may have a limited range of arm movement. "Moving the canvas around instead of the hands" is a potential solution for this group of users, and I believe it can benefit users with other conditions and illnesses.
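The "move the controller, not the hand" effect follows from the sensor reporting hand positions relative to itself. This minimal Python sketch, which does not use the Leap Motion SDK, shows the idea: shifting the controller changes the hand's relative position, which changes where the cursor lands on the canvas. The `InteractionBox` bounds, the canvas size, and the choice to map x to canvas columns and z (toward/away from the user) to canvas rows are hypothetical assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class InteractionBox:
    """Hypothetical region above the controller (mm) that maps onto the canvas."""
    x_min: float = -150.0
    x_max: float = 150.0
    z_min: float = -100.0
    z_max: float = 100.0


def relative_to_controller(hand_world: Vec3, controller_world: Vec3) -> Vec3:
    """The sensor only sees the hand relative to its own position."""
    hx, hy, hz = hand_world
    cx, cy, cz = controller_world
    return (hx - cx, hy - cy, hz - cz)


def to_canvas(rel: Vec3, box: InteractionBox, width: int, height: int) -> tuple[int, int]:
    """Map a controller-relative position to canvas pixels, clamped to the edges.

    x (left/right) maps to canvas columns and z (toward/away from the user)
    maps to canvas rows; height above the device (y) is ignored here.
    """
    x, _, z = rel
    u = (x - box.x_min) / (box.x_max - box.x_min)
    v = (z - box.z_min) / (box.z_max - box.z_min)
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    return round(u * (width - 1)), round(v * (height - 1))


if __name__ == "__main__":
    box = InteractionBox()
    hand = (0.0, 200.0, 0.0)  # the hand stays still
    # Sliding the mount 75 mm to the right shifts the cursor left on the canvas.
    for controller in [(0.0, 0.0, 0.0), (75.0, 0.0, 0.0)]:
        rel = relative_to_controller(hand, controller)
        print(controller, to_canvas(rel, box, 1001, 801))
```

With these assumed values, a still hand lands at the canvas center, then a quarter of the way across once the mount slides, so a motorized mount can bring distant parts of the canvas within reach.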

Winged also takes into account that users may need to be oriented to this setup when they start using the app.

Here is Winged's approach alongside approaches that various engineers have taken in this effort.

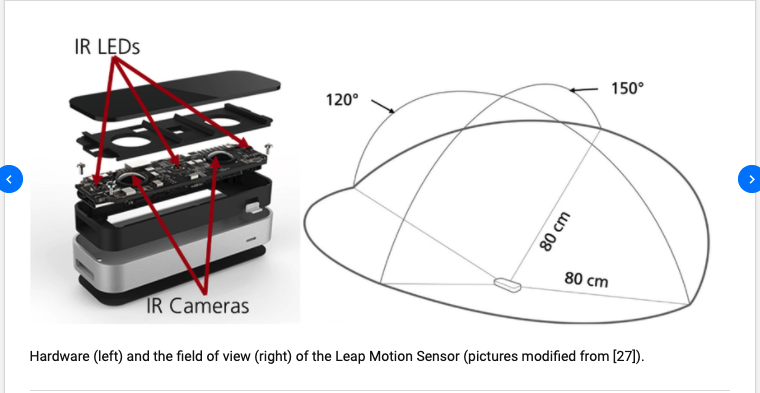

Hardware constraints

While our ideas can aim for the sky, being mindful of current technology's capabilities is also an important part of our work as UX designers/engineers.

- The Leap Motion device has a limited field of view (FoV), typically 150×120°.

- The interaction depth (vertical distance above the device) is preferably 10 cm to 60 cm, with a maximum of 80 cm.
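These limits could drive on-screen feedback during setup, for example warning users when their hand drifts out of range. The minimal Python sketch below classifies a hand position against the figures listed above. It assumes a coordinate frame with y pointing up from the device, and assumes the 150° span lies along the left/right (x) axis and the 120° span along the toward/away (z) axis; both the axis assignment and the zone names are illustrative assumptions, not taken from official documentation.

```python
import math

# Figures from the constraints above. Assigning 150 degrees to x and
# 120 degrees to z is an assumption made for this sketch.
FOV_X_DEG = 150.0
FOV_Z_DEG = 120.0
PREFERRED_MIN_MM = 100.0   # 10 cm
PREFERRED_MAX_MM = 600.0   # 60 cm
MAX_MM = 800.0             # 80 cm


def zone(x: float, y: float, z: float) -> str:
    """Classify a hand position (mm, y = height above the device).

    Returns "preferred", "outside_preferred" (trackable but outside the
    preferred depth), or "out_of_range".
    """
    if y <= 0 or y > MAX_MM:
        return "out_of_range"
    # Angle between the vertical axis and the hand, measured in each plane.
    angle_x = math.degrees(math.atan2(abs(x), y))
    angle_z = math.degrees(math.atan2(abs(z), y))
    if angle_x > FOV_X_DEG / 2 or angle_z > FOV_Z_DEG / 2:
        return "out_of_range"
    if PREFERRED_MIN_MM <= y <= PREFERRED_MAX_MM:
        return "preferred"
    return "outside_preferred"


if __name__ == "__main__":
    for pos in [(0, 300, 0), (0, 700, 0), (400, 100, 0), (0, 900, 0)]:
        print(pos, zone(*pos))
```

Checking the two planes separately treats the field of view as a rectangular pyramid, which is a simplification of the sensor's real tracking volume.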

Summary - Takeaways

One of the main challenges of the app is to adapt to different disabilities to increase inclusivity towards our users.

Some of the key solutions include:

- Experimenting with different device placements on the wheelchair to better suit users' disabilities

- Detecting users' hand joints and bone structures to recommend a setting for them

- Using voice commands to minimize hand interactions and smooth the onboarding process

- Letting users customize button locations in whatever way is convenient for them

Further work

I have identified the following tasks to be done to move this project forward:

- As some users suggested, learn from existing assistive technologies and the gesture settings of different drawing apps to understand the settings and interactions users are already familiar with

- Conduct more research into different disabilities and the physiological structures of people with hand impairments